Auto-test Framework

Abstract

Autotest is a framework for completely automated testing that is primarily built to test the Linux kernel, although it may also be used for a variety of other tasks. Automating the testing process also solves several other critical problems with testing.

- Consistency: It's much easier to ensure that the tests are run the same way they were the last time.

- Knowledge capture: The knowledge of how to execute testing is stored in a system rather than in a single person.

- Sharing: You may quickly share tests with vendors, partners, and the general public.

- Reproducibility: It's been said that getting an easily reproducible test case is 90% of the work in fixing a bug.

Automated testing

Autotest is a framework for testing low-level systems, such as kernels and hardware, in a fully automated manner. It is designed to automate functional and performance testing against running kernels or hardware with as little manual setup as possible. Thanks to automation, testing can be done with less wasted effort, more frequently, and with greater consistency. Testing is obviously important, but the value of regular testing at all stages of development is perhaps less obvious. The two key goals here are parallelism of work and finding issues as quickly as possible. These goals matter for the following reasons:

- It prevents bad code from being replicated in other code bases.

- There are fewer people who are affected by the bug.

- It is less likely that the modification will interact with subsequent alterations.

- Should the need arise, the code is simple to remove.

The open-source development model, and Linux in particular, presents some unique obstacles. Two issues plague open-source projects: there is no mandate to test contributions, and there is no simple funding strategy for regular testing. There is reason to be optimistic, though: in most cases, machine power is much less expensive than human power. With a large number of testers and a variety of hardware, a useful subset of the available combinations should be covered. Linux as a project has plenty of people and hardware; all it needs now is a framework to organise them.

What we do here at Encoding Enhancers:

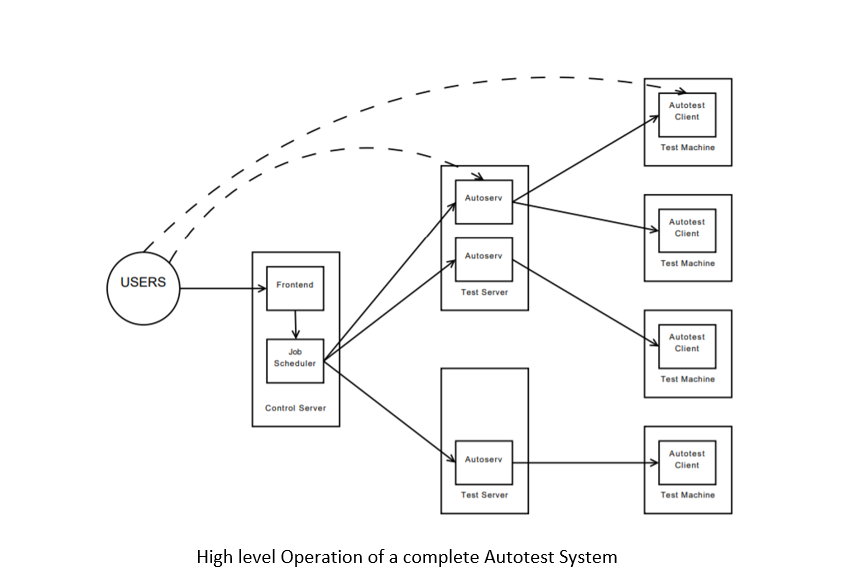

Here at Encoding Enhancers, we use Autotest to simulate data through Modbus registers and compare the resulting output against the expected values. We use the pymodbus library to read and write data to and from the registers. It is a full Modbus protocol implementation that uses twisted/tornado/asyncio for its asynchronous communications core. To execute automated tests, we provide a framework and "harness" for each embedded device.

Autotest is made up of a number of modules that, depending on your needs, help you run stand-alone tests or set up a fully automated test grid. The following is a partial list of modules:

- Autotest client: The engine that runs the tests (directory client). Each Autotest test is represented by a Python class that implements a small number of methods and is located in the client/tests directory. If you're a single developer who wants to try out Autotest and run some tests, the client is what you need. The Autotest client runs "client-side control files," which are ordinary Python programs that make use of the client's API.

- Autotest server: A program that copies the client to remote machines and controls its execution there. Because the Autotest server can control test execution on different machines, it executes "server-side control files," which are standard Python programs that use a higher-level API. Use the Autotest server if you want to run tests that are more complicated and involve more than one machine.

- Autotest command line interface: The Autotest framework also provides a command line interface to run the tests and report the results.
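The register-based check described in this section (write simulated data, let the device process it, read the output back, and compare it with the expected values) can be sketched roughly as follows. This is only an illustration under stated assumptions: `FakeRegisterBank`, `device_under_test`, and `run_register_check` are hypothetical names, the "device" simply doubles each input value, and the in-memory register bank stands in for a real pymodbus client connection, which is not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class FakeRegisterBank:
    """In-memory stand-in for a device's 16-bit registers (hypothetical, not a pymodbus class)."""
    registers: dict = field(default_factory=dict)

    def write_registers(self, address, values):
        # Modbus registers hold unsigned 16-bit values.
        for offset, value in enumerate(values):
            if not 0 <= value <= 0xFFFF:
                raise ValueError(f"value {value} does not fit in a 16-bit register")
            self.registers[address + offset] = value

    def read_registers(self, address, count):
        # Registers that were never written read back as 0.
        return [self.registers.get(address + i, 0) for i in range(count)]


def device_under_test(bank, in_addr, out_addr, count):
    """Assumed device behaviour for this sketch: double each input, clamped to 16 bits."""
    inputs = bank.read_registers(in_addr, count)
    bank.write_registers(out_addr, [min(v * 2, 0xFFFF) for v in inputs])


def run_register_check(bank, in_addr, out_addr, simulated, expected):
    """Write simulated inputs, run the device, and return a list of mismatches.

    Each mismatch is a tuple (register address, expected value, actual value).
    An empty list means the output matched.
    """
    bank.write_registers(in_addr, simulated)
    device_under_test(bank, in_addr, out_addr, len(simulated))
    actual = bank.read_registers(out_addr, len(expected))
    return [
        (out_addr + i, exp, act)
        for i, (exp, act) in enumerate(zip(expected, actual))
        if exp != act
    ]


if __name__ == "__main__":
    bank = FakeRegisterBank()
    print(run_register_check(bank, 0, 100, [1, 2, 3], [2, 4, 6]))
```

Returning the mismatching registers, rather than a bare pass/fail flag, keeps the result easy to read when a check fails. In a real harness the register bank would be replaced by calls to the actual Modbus client.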

Problems Faced in Automation:

Testcase Reusability: The amount of manual labour required to create and maintain a library of testcases is one of the most important parameters connected with overall testing velocity in an automated system.

Readable Reporting: It's critical to utilise test scripts that have a low barrier to entry and are relatively straightforward to reuse, as well as test results that are understandable and accessible—otherwise, even the most complete tests won't lead to quick bug fixes.

Testing on Flagship Devices: The ability to use the most up-to-date technology is critical for test labs, and any delay in introducing flagship devices into an automation workflow can slow down test lab operations. If the automation supplier cannot support new devices promptly, it signals that serious operational challenges may arise in the future.

Achieving High Test Coverage: Last but not least, keeping up with the growing number and complexity of use cases that require verification, in order to achieve sufficient test coverage, is possibly the most crucial difficulty that modern telecom testers face.

In a network environment that is increasingly bombarded with new devices and protocols, this is easier said than done—but it's critical for avoiding outages, maintaining high network quality, and retaining subscribers.

Any automation framework you're evaluating should be able to demonstrate its capacity to increase test coverage. Inquire with any possible vendors about the number of use cases their solution can handle each day, how fast they can set up a test environment, and how quickly testcases can be programmed.

Future Directions

Autotest has made significant progress in automating kernel and hardware tests. However, test execution is normally part of a qualification process, and the overall qualification procedure is still tedious and mechanical. Qualifying a new kernel usually entails executing a series of functional and performance tests on a large number of machines representing a variety of hardware platforms. The selection of tests to run may be influenced by the results of previous runs. To discover statistically significant variations, the findings must be compared to those of a known stable kernel.

While Autotest abstracts away many of the low-level difficulties in these procedures, it does little to automate the higher-level ones. Successfully automating such processes is one of the Autotest project's primary unsolved problems. Fortunately, the frontend's high-level automation capability allows solutions to these issues to be prototyped. Such solutions can be built on top of the Autotest architecture without modifying Autotest itself, and a number of them have already been built to meet the demands of certain Autotest users. These prototypes show how such automation could be added to the Autotest system in the future.

Furthermore, Autotest still has a lot of room to improve its reporting. Although Autotest's current reporting interface can generate a range of results that span several tasks, it still requires significant manual effort to draw relevant high-level conclusions, and it makes triage of failures difficult. To help with drawing conclusions, Autotest needs better automated folding of large quantities of data into smaller, more succinct reports that highlight important quality deviations while hiding the rest of the data. To make triaging failures easier, Autotest needs to improve how it categorises and organises test output, as well as how it directs users to the locations where failure details are most likely to be found.
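To make the idea of comparing results against a known stable kernel and folding them into a short report more concrete, here is a minimal sketch. It is not part of Autotest: the function names, the benchmark names in the usage example, and the threshold of 3.0 are all assumptions chosen for illustration. It computes Welch's t statistic per benchmark and keeps only the benchmarks whose deviation exceeds the threshold. A real qualification process would likely use a proper significance test and more samples.

```python
import math
import statistics


def welch_t(baseline, candidate):
    """Welch's t statistic for two independent samples (needs >= 2 values each)."""
    mean_b, mean_c = statistics.mean(baseline), statistics.mean(candidate)
    var_b, var_c = statistics.variance(baseline), statistics.variance(candidate)
    std_err = math.sqrt(var_b / len(baseline) + var_c / len(candidate))
    if std_err == 0:
        # Both samples are constant: identical means give 0, otherwise +/- infinity.
        if mean_b == mean_c:
            return 0.0
        return math.copysign(math.inf, mean_c - mean_b)
    return (mean_c - mean_b) / std_err


def fold_results(baseline_runs, candidate_runs, threshold=3.0):
    """Return (benchmark, t) pairs only for benchmarks that deviate noticeably.

    `threshold` is an arbitrary cut-off assumed for this sketch.
    Benchmarks missing from the candidate results are skipped.
    """
    report = []
    for name in sorted(baseline_runs):
        if name not in candidate_runs:
            continue
        t = welch_t(baseline_runs[name], candidate_runs[name])
        if abs(t) > threshold:
            report.append((name, t))
    return report


if __name__ == "__main__":
    # Hypothetical scores from repeated runs on a stable and a new kernel.
    stable = {"bench_a": [100, 101, 99, 100], "bench_b": [50, 51, 49, 50]}
    new = {"bench_a": [100, 100, 101, 99], "bench_b": [60, 61, 59, 60]}
    for name, t in fold_results(stable, new):
        print(f"{name}: t = {t:.2f}")
```

Only the benchmarks that cross the threshold appear in the report, which reflects the goal of highlighting important deviations while hiding the remainder of the data.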
